今日论文合集:cs.SD语音7篇,eess.AS音频处理10篇。

【1】 A Preliminary Investigation on Flexible Singing Voice Synthesis Through Decomposed Framework with Inferrable Features

链接:https://arxiv.org/abs/2407.09346

备注:Preliminary investigations

摘要:我们研究的可行性歌唱声音合成(SVS)系统,通过使用一个分解的框架,以提高灵活性,在生成歌唱的声音。由于数据驱动的方法,SVS执行乐谱到波形的映射;然而,直接映射限制了控制,例如只能在标记的歌唱数据集中合成语言或歌手。由于收集带有乐谱标记的大型歌唱数据集是一项昂贵的任务,我们通过分解SVS系统并推断不同的歌唱声音特征来研究另一种方法。我们将SVS系统分解为语言,音高轮廓和合成三个阶段的模块,其中唱歌的声音特征,如语言内容,F0,有声/清音,歌手嵌入和响度直接从音频中推断。通过这个分解的框架,我们表明我们可以减轻标记数据集的要求,适应不同的语言或歌手,并对歌声的抒情内容进行补绘。我们的调查表明,尽管该模型具有额外的功能和更高的灵活性,但该框架有潜力在SVS中达到最先进的水平。对我们研究框架当前功能的全面分析揭示了研究界实现灵活且多功能的SVS系统的方式。

摘要:We investigate the feasibility of a singing voice synthesis (SVS) system by using a decomposed framework to improve flexibility in generating singing voices. Due to data-driven approaches, SVS performs a music score-to-waveform mapping; however, the direct mapping limits control, such as being able to only synthesize in the language or the singers present in the labeled singing datasets. As collecting large singing datasets labeled with music scores is an expensive task, we investigate an alternative approach by decomposing the SVS system and inferring different singing voice features. We decompose the SVS system into three-stage modules of linguistic, pitch contour, and synthesis, in which singing voice features such as linguistic content, F0, voiced/unvoiced, singer embeddings, and loudness are directly inferred from audio. Through this decomposed framework, we show that we can alleviate the labeled dataset requirements, adapt to different languages or singers, and inpaint the lyrical content of singing voices. Our investigations show that the framework has the potential to reach state-of-the-art in SVS, even though the model has additional functionality and improved flexibility. The comprehensive analysis of our investigated framework’s current capabilities sheds light on the ways the research community can achieve a flexible and multifunctional SVS system.

标题:使用RefinPaint进行音乐校对:在给定上下文的情况下在哪里以及如何修改作品

链接:https://arxiv.org/abs/2407.09099

摘要:None

摘要:Autoregressive generative transformers are key in music generation, producing coherent compositions but facing challenges in human-machine collaboration. We propose RefinPaint, an iterative technique that improves the sampling process. It does this by identifying the weaker music elements using a feedback model, which then informs the choices for resampling by an inpainting model. This dual-focus methodology not only facilitates the machine’s ability to improve its automatic inpainting generation through repeated cycles but also offers a valuable tool for humans seeking to refine their compositions with automatic proofreading. Experimental results suggest RefinPaint’s effectiveness in inpainting and proofreading tasks, demonstrating its value for refining music created by both machines and humans. This approach not only facilitates creativity but also aids amateur composers in improving their work.

标题:增强不完整数据中的情绪识别:一种新型的跨模式对齐、重建和细化框架

链接:https://arxiv.org/abs/2407.09029

摘要:多模态情感识别系统严重依赖模态的完全可用性,当模态数据不完整时,性能会显著下降。为了解决这个问题,我们提出了跨模态对齐,重建和细化(CM-ARR)框架,这是一种创新的方法,它依次进行跨模态对齐,重建和细化阶段,以处理丢失的模态并增强情感识别。该框架利用基于无监督分布的对比学习来对齐异构模态分布,减少差异并有效地建模语义不确定性。重建阶段应用归一化流模型来变换这些对齐的分布并恢复丢失的模态。细化阶段采用基于监督点的对比学习来破坏语义相关性并强调情感特征,从而丰富重构表示的情感内容。IEMOCAP和MSP-IMPROV数据集上的大量实验证实了CM-ARR在缺失和完整模态条件下的优越性能。值得注意的是,在六种缺失模式的情况下,CM-ARR在IEMOCAP数据集上实现了WAR 2.11%和UAR 2.12%的绝对改善,在MSP-IMPROV数据集上分别实现了WAR和UAR 1.71%和1.96%的绝对改善。

摘要:Multimodal emotion recognition systems rely heavily on the full availability of modalities, suffering significant performance declines when modal data is incomplete. To tackle this issue, we present the Cross-Modal Alignment, Reconstruction, and Refinement (CM-ARR) framework, an innovative approach that sequentially engages in cross-modal alignment, reconstruction, and refinement phases to handle missing modalities and enhance emotion recognition. This framework utilizes unsupervised distribution-based contrastive learning to align heterogeneous modal distributions, reducing discrepancies and modeling semantic uncertainty effectively. The reconstruction phase applies normalizing flow models to transform these aligned distributions and recover missing modalities. The refinement phase employs supervised point-based contrastive learning to disrupt semantic correlations and accentuate emotional traits, thereby enriching the affective content of the reconstructed representations. Extensive experiments on the IEMOCAP and MSP-IMPROV datasets confirm the superior performance of CM-ARR under conditions of both missing and complete modalities. Notably, averaged across six scenarios of missing modalities, CM-ARR achieves absolute improvements of 2.11% in WAR and 2.12% in UAR on the IEMOCAP dataset, and 1.71% and 1.96% in WAR and UAR, respectively, on the MSP-IMPROV dataset.

标题:使用非负张量分解和基于吸引子的正规化的音频点形成

链接:https://arxiv.org/abs/2407.08951

备注:Accepted at EUSIPCO2024

摘要:点形成是一种使用多个麦克风阵列的目标说话人提取技术。该方法将波束形成(BF)应用于每个麦克风阵列,并将BF输出之间的公共分量估计为目标源。提出了一种基于非负张量因子分解(NTF)的公共分量提取方法,以提高模型的可解释性和对超参数的鲁棒性。此外,基于吸引子的正则化,以促进自动选择的最佳目标基的NTF。实验结果表明,该方法具有比传统方法更好的光斑形成性能,并显示出一些适合于实际应用的特点。

摘要:Spotforming is a target-speaker extraction technique that uses multiple microphone arrays. This method applies beamforming (BF) to each microphone array, and the common components among the BF outputs are estimated as the target source. This study proposes a new common component extraction method based on nonnegative tensor factorization (NTF) for higher model interpretability and more robust spotforming against hyperparameters. Moreover, attractor-based regularization was introduced to facilitate the automatic selection of optimal target bases in the NTF. Experimental results show that the proposed method performs better than conventional methods in spotforming performance and also shows some characteristics suitable for practical use.

标题:知情FastICA:半盲最小方差无失真束形成器

链接:https://arxiv.org/abs/2407.09259

备注:accepted for IWAENC 2024

摘要:基于非高斯性的独立向量提取算法在正交约束条件下使用近似Newton-Raphson算法优化似然函数时,会导致著名的单单元FastICA/FastIVA算法。在本文中,我们取代的约束与最小方差无失真波束形成器(MVDR)的解析形式,通过它的FastICA/FastIVA的半盲变体。这里的边信息由加权协方差矩阵代替噪声协方差矩阵提供,噪声协方差矩阵的估计是神经波束形成器的常见目标。因此,该算法提供了一个直观的基于模型的盲提取和基于学习的提取之间的联系。该算法在仿真和说话人ID引导的说话人提取中进行了测试,显示出快速的收敛性和良好的性能。

摘要:Non-Gaussianity-based Independent Vector Extraction leads to the famous one-unit FastICA/FastIVA algorithm when the likelihood function is optimized using an approximate Newton-Raphson algorithm under the orthogonality constraint. In this paper, we replace the constraint with the analytic form of the minimum variance distortionless beamformer (MVDR), by which a semi-blind variant of FastICA/FastIVA is obtained. The side information here is provided by a weighted covariance matrix replacing the noise covariance matrix, the estimation of which is a frequent goal of neural beamformers. The algorithm thus provides an intuitive connection between model-based blind extraction and learning-based extraction. The algorithm is tested in simulations and speaker ID-guided speaker extraction, showing fast convergence and promising performance.

标题:迪夫-MST:差异化的混合风格转移

链接:https://arxiv.org/abs/2407.08889

备注:Accepted to be published at the Proceedings of the 25th International Society for Music Information Retrieval Conference 2024

摘要:混音风格转换通过从参考歌曲推断制作属性来自动生成给定曲目集的多曲目混音。然而,用于混合风格转移的现有系统受到限制,因为它们通常仅在固定数量的轨道上操作,引入伪像,并且以端到端的方式产生混合,而不以传统音频效果为基础,从而禁止可解释性和可控性。为了克服这些挑战,我们介绍了Diff-MST,一个框架,包括一个微分混音控制台,一个Transformer控制器,和一个音频制作风格的损失函数。通过输入原始曲目和参考歌曲,我们的模型可以在可区分的混音控制台内估计音频效果的控制参数,产生高质量的混音并进行事后调整。此外,我们的架构支持任意数量的输入轨道,而无需源标签,从而实现现实世界的应用程序。我们根据强大的基线评估模型的性能,并展示我们的方法,架构设计,定制的音频制作风格损失以及针对给定任务的创新培训方法的有效性。

摘要:Mixing style transfer automates the generation of a multitrack mix for a given set of tracks by inferring production attributes from a reference song. However, existing systems for mixing style transfer are limited in that they often operate only on a fixed number of tracks, introduce artifacts, and produce mixes in an end-to-end fashion, without grounding in traditional audio effects, prohibiting interpretability and controllability. To overcome these challenges, we introduce Diff-MST, a framework comprising a differentiable mixing console, a transformer controller, and an audio production style loss function. By inputting raw tracks and a reference song, our model estimates control parameters for audio effects within a differentiable mixing console, producing high-quality mixes and enabling post-hoc adjustments. Moreover, our architecture supports an arbitrary number of input tracks without source labelling, enabling real-world applications. We evaluate our model’s performance against robust baselines and showcase the effectiveness of our approach, architectural design, tailored audio production style loss, and innovative training methodology for the given task.

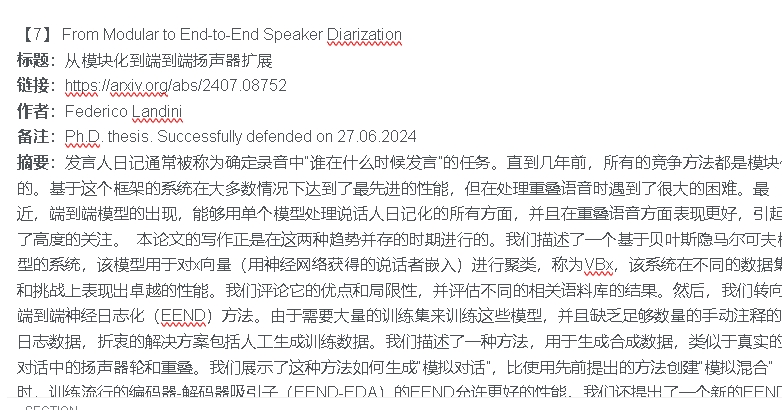

标题:从模块化到端到端扬声器扩展

链接:https://arxiv.org/abs/2407.08752

备注:Ph.D. thesis. Successfully defended on 27.06.2024

摘要:发言人日记通常被称为确定录音中“谁在什么时候发言”的任务。直到几年前,所有的竞争方法都是模块化的。基于这个框架的系统在大多数情况下达到了最先进的性能,但在处理重叠语音时遇到了很大的困难。最近,端到端模型的出现,能够用单个模型处理说话人日记化的所有方面,并且在重叠语音方面表现更好,引起了高度的关注。 本论文的写作正是在这两种趋势并存的时期进行的。我们描述了一个基于贝叶斯隐马尔可夫模型的系统,该模型用于对x向量(用神经网络获得的说话者嵌入)进行聚类,称为VBx,该系统在不同的数据集和挑战上表现出卓越的性能。我们评论它的优点和局限性,并评估不同的相关语料库的结果。然后,我们转向端到端神经日志化(EEND)方法。由于需要大量的训练集来训练这些模型,并且缺乏足够数量的手动注释的日志数据,折衷的解决方案包括人工生成训练数据。我们描述了一种方法,用于生成合成数据,类似于真实的对话中的扬声器轮和重叠。我们展示了这种方法如何生成“模拟对话”,比使用先前提出的方法创建“模拟混合”时,训练流行的编码器-解码器吸引子(EEND-EDA)的EEND允许更好的性能。我们还提出了一个新的EEND为基础的模型,我们称之为DiaPer,并表明它可以比EEND-EDA更好地执行,特别是在处理多个扬声器和处理重叠的语音。最后,我们在各种语料库上比较了基于VBx的系统和DiaPer系统,并评论了每种技术的优点。

摘要:Speaker diarization is usually referred to as the task that determines “who spoke when” in a recording. Until a few years ago, all competitive approaches were modular. Systems based on this framework reached state-of-the-art performance in most scenarios but had major difficulties dealing with overlapped speech. More recently, the advent of end-to-end models, capable of dealing with all aspects of speaker diarization with a single model and better performing regarding overlapped speech, has brought high levels of attention. This thesis is framed during a period of co-existence of these two trends. We describe a system based on a Bayesian hidden Markov model used to cluster x-vectors (speaker embeddings obtained with a neural network), known as VBx, which has shown remarkable performance on different datasets and challenges. We comment on its advantages and limitations and evaluate results on different relevant corpora. Then, we move towards end-to-end neural diarization (EEND) methods. Due to the need for large training sets for training these models and the lack of manually annotated diarization data in sufficient quantities, the compromise solution consists in generating training data artificially. We describe an approach for generating synthetic data which resembles real conversations in terms of speaker turns and overlaps. We show how this method generating “simulated conversations” allows for better performance than using a previously proposed method for creating “simulated mixtures” when training the popular EEND with encoder-decoder attractors (EEND-EDA). We also propose a new EEND-based model, which we call DiaPer, and show that it can perform better than EEND-EDA, especially when dealing with many speakers and handling overlapped speech. Finally, we compare both VBx-based and DiaPer systems on a wide variety of corpora and comment on the advantages of each technique.

标题:知情FastICA:半盲最小方差无失真束形成器

链接:https://arxiv.org/abs/2407.09259

备注:accepted for IWAENC 2024

摘要:基于非高斯性的独立向量提取算法在正交约束条件下使用近似Newton-Raphson算法优化似然函数时,会导致著名的单单元FastICA/FastIVA算法。在本文中,我们取代的约束与最小方差无失真波束形成器(MVDR)的解析形式,通过它的FastICA/FastIVA的半盲变体。这里的边信息由加权协方差矩阵代替噪声协方差矩阵提供,噪声协方差矩阵的估计是神经波束形成器的常见目标。因此,该算法提供了一个直观的基于模型的盲提取和基于学习的提取之间的联系。该算法在仿真和说话人ID引导的说话人提取中进行了测试,显示出快速的收敛性和良好的性能。

摘要:Non-Gaussianity-based Independent Vector Extraction leads to the famous one-unit FastICA/FastIVA algorithm when the likelihood function is optimized using an approximate Newton-Raphson algorithm under the orthogonality constraint. In this paper, we replace the constraint with the analytic form of the minimum variance distortionless beamformer (MVDR), by which a semi-blind variant of FastICA/FastIVA is obtained. The side information here is provided by a weighted covariance matrix replacing the noise covariance matrix, the estimation of which is a frequent goal of neural beamformers. The algorithm thus provides an intuitive connection between model-based blind extraction and learning-based extraction. The algorithm is tested in simulations and speaker ID-guided speaker extraction, showing fast convergence and promising performance.

标题:挤压激励ResNet Conformers,用于DUSE 2024挑战赛的声音事件定位、检测和距离估计

链接:https://arxiv.org/abs/2407.09021

备注:Technical report for DCASE 2024 Challenge Task 3

摘要:本技术报告详细介绍了我们为DCASE 2024挑战赛的任务3提交的系统:音频和视听声音事件定位和检测(SELD)与源距离估计(SELD)。在本报告中,我们仅讨论仅音频SELD(SELDDE)任务。我们建议使用挤压和激励块来改进现有的ResNet-Conformer架构,以便引入额外形式的通道和空间方面的注意力。为了提高SELD的性能,我们还利用了空间线索增强对数谱图(SALSA)的特点,在常用的对数梅尔谱特征的复调SELD。我们使用音频通道交换技术补充了现有的Sony-TAu Realistic Spatial Soundscapes 2023(STARSS 23)数据集,并使用SpatialScaper生成器合成了额外的数据。我们还执行距离缩放,以防止大的距离误差对损失函数的贡献更大。最后,我们评估我们的方法上的评估子集的STARSS 23数据集。

摘要:This technical report details our systems submitted for Task 3 of the DCASE 2024 Challenge: Audio and Audiovisual Sound Event Localization and Detection (SELD) with Source Distance Estimation (SDE). We address only the audio-only SELD with SDE (SELDDE) task in this report. We propose to improve the existing ResNet-Conformer architectures with Squeeze-and-Excitation blocks in order to introduce additional forms of channel- and spatial-wise attention. In order to improve SELD performance, we also utilize the Spatial Cue-Augmented Log-Spectrogram (SALSA) features over the commonly used log-mel spectra features for polyphonic SELD. We complement the existing Sony-TAu Realistic Spatial Soundscapes 2023 (STARSS23) dataset with the audio channel swapping technique and synthesize additional data using the SpatialScaper generator. We also perform distance scaling in order to prevent large distance errors from contributing more towards the loss function. Finally, we evaluate our approach on the evaluation subset of the STARSS23 dataset.

标题:通过高效量化技术优化基于DNN的说话人验证模型

链接:https://arxiv.org/abs/2407.08991

备注:in Korean language, Accepted at Society of Electronic Engineers of Korea Conference 2024

摘要:随着深度神经网络(DNN)在包括语音验证在内的各个领域的快速发展,它们通常涉及高计算成本和大量内存消耗,这可能对移动系统的管理构成挑战。深度模型的量化提供了一种减少计算和内存开销的方法。我们的研究提出了一个优化框架的量化的说话人确认模型。通过分析预先训练的说话人验证模型的每一层的性能变化和模型大小的减少,我们有效地减少了性能下降,同时显着减少了模型大小。我们的量化算法是第一次尝试保持最先进的预训练说话人验证模型ECAPATDNN的性能,同时显着压缩其模型大小。总的来说,我们的量化方法导致模型大小减少了一半,EER的增加限制在0.07%。

摘要:As Deep Neural Networks (DNNs) rapidly advance in various fields, including speech verification, they typically involve high computational costs and substantial memory consumption, which can be challenging to manage on mobile systems. Quantization of deep models offers a means to reduce both computational and memory expenses. Our research proposes an optimization framework for the quantization of the speaker verification model. By analyzing performance changes and model size reductions in each layer of a pre-trained speaker verification model, we have effectively minimized performance degradation while significantly reducing the model size. Our quantization algorithm is the first attempt to maintain the performance of the state-of-the-art pre-trained speaker verification model, ECAPATDNN, while significantly compressing its model size. Overall, our quantization approach resulted in reducing the model size by half, with an increase in EER limited to 0.07%.

标题:迪夫-MST:差异化的混合风格转移

链接:https://arxiv.org/abs/2407.08889

备注:Accepted to be published at the Proceedings of the 25th International Society for Music Information Retrieval Conference 2024

摘要:混音风格转换通过从参考歌曲推断制作属性来自动生成给定曲目集的多曲目混音。然而,用于混合风格转移的现有系统受到限制,因为它们通常仅在固定数量的轨道上操作,引入伪像,并且以端到端的方式产生混合,而不以传统音频效果为基础,从而禁止可解释性和可控性。为了克服这些挑战,我们介绍了Diff-MST,一个框架,包括一个微分混音控制台,一个Transformer控制器,和一个音频制作风格的损失函数。通过输入原始曲目和参考歌曲,我们的模型可以在可区分的混音控制台内估计音频效果的控制参数,产生高质量的混音并进行事后调整。此外,我们的架构支持任意数量的输入轨道,而无需源标签,从而实现现实世界的应用程序。我们根据强大的基线评估模型的性能,并展示我们的方法,架构设计,定制的音频制作风格损失以及针对给定任务的创新培训方法的有效性。

摘要:Mixing style transfer automates the generation of a multitrack mix for a given set of tracks by inferring production attributes from a reference song. However, existing systems for mixing style transfer are limited in that they often operate only on a fixed number of tracks, introduce artifacts, and produce mixes in an end-to-end fashion, without grounding in traditional audio effects, prohibiting interpretability and controllability. To overcome these challenges, we introduce Diff-MST, a framework comprising a differentiable mixing console, a transformer controller, and an audio production style loss function. By inputting raw tracks and a reference song, our model estimates control parameters for audio effects within a differentiable mixing console, producing high-quality mixes and enabling post-hoc adjustments. Moreover, our architecture supports an arbitrary number of input tracks without source labelling, enabling real-world applications. We evaluate our model’s performance against robust baselines and showcase the effectiveness of our approach, architectural design, tailored audio production style loss, and innovative training methodology for the given task.

标题:从模块化到端到端扬声器扩展

链接:https://arxiv.org/abs/2407.08752

备注:Ph.D. thesis. Successfully defended on 27.06.2024

摘要:发言人日记通常被称为确定录音中“谁在什么时候发言”的任务。直到几年前,所有的竞争方法都是模块化的。基于这个框架的系统在大多数情况下达到了最先进的性能,但在处理重叠语音时遇到了很大的困难。最近,端到端模型的出现,能够用单个模型处理说话人日记化的所有方面,并且在重叠语音方面表现更好,引起了高度的关注。 本论文的写作正是在这两种趋势并存的时期进行的。我们描述了一个基于贝叶斯隐马尔可夫模型的系统,该模型用于对x向量(用神经网络获得的说话者嵌入)进行聚类,称为VBx,该系统在不同的数据集和挑战上表现出卓越的性能。我们评论它的优点和局限性,并评估不同的相关语料库的结果。然后,我们转向端到端神经日志化(EEND)方法。由于需要大量的训练集来训练这些模型,并且缺乏足够数量的手动注释的日志数据,折衷的解决方案包括人工生成训练数据。我们描述了一种方法,用于生成合成数据,类似于真实的对话中的扬声器轮和重叠。我们展示了这种方法如何生成“模拟对话”,比使用先前提出的方法创建“模拟混合”时,训练流行的编码器-解码器吸引子(EEND-EDA)的EEND允许更好的性能。我们还提出了一个新的EEND为基础的模型,我们称之为DiaPer,并表明它可以比EEND-EDA更好地执行,特别是在处理多个扬声器和处理重叠的语音。最后,我们比较了基于VBx和DiaPer系统的各种语料库和评论的优势,每种技术。

摘要:Speaker diarization is usually referred to as the task that determines “who spoke when” in a recording. Until a few years ago, all competitive approaches were modular. Systems based on this framework reached state-of-the-art performance in most scenarios but had major difficulties dealing with overlapped speech. More recently, the advent of end-to-end models, capable of dealing with all aspects of speaker diarization with a single model and better performing regarding overlapped speech, has brought high levels of attention. This thesis is framed during a period of co-existence of these two trends. We describe a system based on a Bayesian hidden Markov model used to cluster x-vectors (speaker embeddings obtained with a neural network), known as VBx, which has shown remarkable performance on different datasets and challenges. We comment on its advantages and limitations and evaluate results on different relevant corpora. Then, we move towards end-to-end neural diarization (EEND) methods. Due to the need for large training sets for training these models and the lack of manually annotated diarization data in sufficient quantities, the compromise solution consists in generating training data artificially. We describe an approach for generating synthetic data which resembles real conversations in terms of speaker turns and overlaps. We show how this method generating “simulated conversations” allows for better performance than using a previously proposed method for creating “simulated mixtures” when training the popular EEND with encoder-decoder attractors (EEND-EDA). We also propose a new EEND-based model, which we call DiaPer, and show that it can perform better than EEND-EDA, especially when dealing with many speakers and handling overlapped speech. Finally, we compare both VBx-based and DiaPer systems on a wide variety of corpora and comment on the advantages of each technique.

标题:利用具有不可分割特征的分解框架进行灵活歌唱声音合成的初步研究

链接:https://arxiv.org/abs/2407.09346

备注:Preliminary investigations

摘要:我们研究的可行性歌唱声音合成(SVS)系统,通过使用一个分解的框架,以提高灵活性,在生成歌唱的声音。由于数据驱动的方法,SVS执行乐谱到波形的映射;然而,直接映射限制了控制,例如只能在标记的歌唱数据集中合成语言或歌手。由于收集带有乐谱标记的大型歌唱数据集是一项昂贵的任务,我们通过分解SVS系统并推断不同的歌唱声音特征来研究另一种方法。我们将SVS系统分解为语言,音高轮廓和合成三个阶段的模块,其中唱歌的声音特征,如语言内容,F0,有声/清音,歌手嵌入和响度直接从音频中推断。通过这个分解的框架,我们表明,我们可以减轻标记数据集的要求,适应不同的语言或歌手,并修补歌唱声音的抒情内容。我们的调查表明,该框架有潜力达到国家的最先进的SVS,即使该模型具有额外的功能和改进的灵活性。我们的调查框架的当前能力的全面分析揭示了研究界可以实现一个灵活的和多功能的SVS系统的方式。

摘要:We investigate the feasibility of a singing voice synthesis (SVS) system by using a decomposed framework to improve flexibility in generating singing voices. Due to data-driven approaches, SVS performs a music score-to-waveform mapping; however, the direct mapping limits control, such as being able to only synthesize in the language or the singers present in the labeled singing datasets. As collecting large singing datasets labeled with music scores is an expensive task, we investigate an alternative approach by decomposing the SVS system and inferring different singing voice features. We decompose the SVS system into three-stage modules of linguistic, pitch contour, and synthesis, in which singing voice features such as linguistic content, F0, voiced/unvoiced, singer embeddings, and loudness are directly inferred from audio. Through this decomposed framework, we show that we can alleviate the labeled dataset requirements, adapt to different languages or singers, and inpaint the lyrical content of singing voices. Our investigations show that the framework has the potential to reach state-of-the-art in SVS, even though the model has additional functionality and improved flexibility. The comprehensive analysis of our investigated framework’s current capabilities sheds light on the ways the research community can achieve a flexible and multifunctional SVS system.

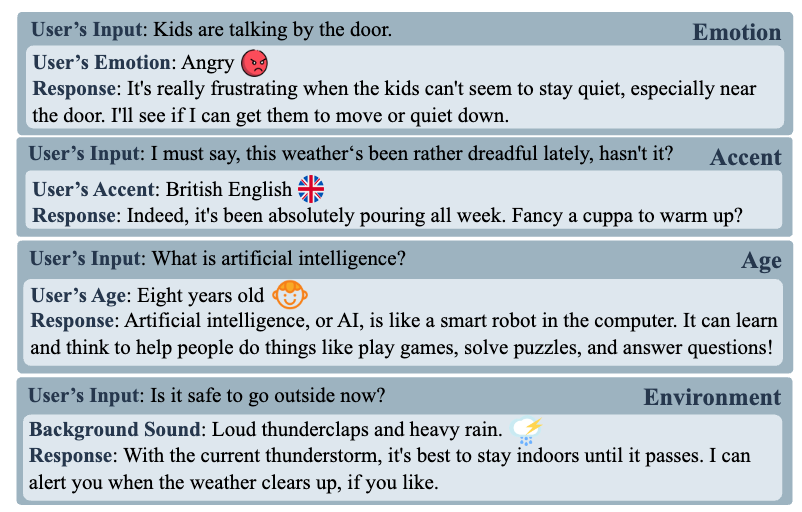

标题:利用多语气大型语言模型进行发音评估

链接:https://arxiv.org/abs/2407.09209

摘要:大型语言模型(LLM)以其强大的会话能力而闻名,被广泛认为是教育领域的特殊工具,特别是在用于语言学习的自动化智能教学系统的背景下。在本文中,我们提出了一个评分系统的基础上LLM,其积极影响的文本相关的评分任务的动机。具体地,语音编码器首先将学习者的语音映射成上下文特征。适配器层然后转换这些特征以与潜在空间中的文本嵌入对齐。评估任务特定的前缀和提示文本被嵌入并与模态适配器层生成的特征连接,使LLM能够预测准确性和流畅性分数。我们的实验表明,建议的评分系统实现了竞争力的结果相比,基线的Speechocean762数据集。此外,我们还进行了消融研究,以更好地了解提示文本和培训策略在建议的评分系统的贡献。

摘要:Large language models (LLMs), renowned for their powerful conversational abilities, are widely recognized as exceptional tools in the field of education, particularly in the context of automated intelligent instruction systems for language learning. In this paper, we propose a scoring system based on LLMs, motivated by their positive impact on text-related scoring tasks. Specifically, the speech encoder first maps the learner’s speech into contextual features. The adapter layer then transforms these features to align with the text embedding in latent space. The assessment task-specific prefix and prompt text are embedded and concatenated with the features generated by the modality adapter layer, enabling the LLMs to predict accuracy and fluency scores. Our experiments demonstrate that the proposed scoring systems achieve competitive results compared to the baselines on the Speechocean762 datasets. Moreover, we also conducted an ablation study to better understand the contributions of the prompt text and training strategy in the proposed scoring system.

标题:使用RefinPaint进行音乐校对:在给定上下文的情况下在哪里以及如何修改作品

链接:https://arxiv.org/abs/2407.09099

摘要:自回归生成Transformers是音乐生成的关键,可以生成连贯的作品,但在人机协作方面面临挑战。我们提出了RefinPaint,一种改进采样过程的迭代技术。它通过使用反馈模型识别较弱的音乐元素来做到这一点,然后通过修复模型通知重新定位的选择。这种双焦点方法不仅有助于机器通过重复循环来提高其自动修复生成的能力,而且还为寻求通过自动校对来改进其构图的人类提供了一个有价值的工具。实验结果表明,RefinPaint在修复和校对任务中的有效性,证明了它对机器和人类创作的音乐的价值。这种方法不仅促进了创造力,而且有助于业余作曲家改进他们的工作。

摘要:Autoregressive generative transformers are key in music generation, producing coherent compositions but facing challenges in human-machine collaboration. We propose RefinPaint, an iterative technique that improves the sampling process. It does this by identifying the weaker music elements using a feedback model, which then informs the choices for resampling by an inpainting model. This dual-focus methodology not only facilitates the machine’s ability to improve its automatic inpainting generation through repeated cycles but also offers a valuable tool for humans seeking to refine their compositions with automatic proofreading. Experimental results suggest RefinPaint’s effectiveness in inpainting and proofreading tasks, demonstrating its value for refining music created by both machines and humans. This approach not only facilitates creativity but also aids amateur composers in improving their work.

标题:增强不完整数据中的情绪识别:一种新型的跨模式对齐、重建和细化框架

链接:https://arxiv.org/abs/2407.09029

摘要:多模态情感识别系统严重依赖模态的完全可用性,当模态数据不完整时,性能会显著下降。为了解决这个问题,我们提出了跨模态对齐,重建和细化(CM-ARR)框架,这是一种创新的方法,它依次进行跨模态对齐,重建和细化阶段,以处理丢失的模态并增强情感识别。该框架利用基于无监督分布的对比学习来对齐异构模态分布,减少差异并有效地建模语义不确定性。重建阶段应用归一化流模型来变换这些对齐的分布并恢复丢失的模态。细化阶段采用基于监督点的对比学习来破坏语义相关性并强调情感特征,从而丰富重构表示的情感内容。IEMOCAP和MSP-IMPROV数据集上的大量实验证实了CM-ARR在缺失和完整模态条件下的优越性能。值得注意的是,在六种缺失模式的情况下,CM-ARR在IEMOCAP数据集上实现了WAR 2.11%和UAR 2.12%的绝对改善,在MSP-IMPROV数据集上分别实现了WAR和UAR 1.71%和1.96%的绝对改善。

摘要:Multimodal emotion recognition systems rely heavily on the full availability of modalities, suffering significant performance declines when modal data is incomplete. To tackle this issue, we present the Cross-Modal Alignment, Reconstruction, and Refinement (CM-ARR) framework, an innovative approach that sequentially engages in cross-modal alignment, reconstruction, and refinement phases to handle missing modalities and enhance emotion recognition. This framework utilizes unsupervised distribution-based contrastive learning to align heterogeneous modal distributions, reducing discrepancies and modeling semantic uncertainty effectively. The reconstruction phase applies normalizing flow models to transform these aligned distributions and recover missing modalities. The refinement phase employs supervised point-based contrastive learning to disrupt semantic correlations and accentuate emotional traits, thereby enriching the affective content of the reconstructed representations. Extensive experiments on the IEMOCAP and MSP-IMPROV datasets confirm the superior performance of CM-ARR under conditions of both missing and complete modalities. Notably, averaged across six scenarios of missing modalities, CM-ARR achieves absolute improvements of 2.11% in WAR and 2.12% in UAR on the IEMOCAP dataset, and 1.71% and 1.96% in WAR and UAR, respectively, on the MSP-IMPROV dataset.

标题:使用非负张量分解和基于吸引子的正规化的音频点形成

链接:https://arxiv.org/abs/2407.08951

备注:Accepted at EUSIPCO2024

摘要:点形成是一种使用多个麦克风阵列的目标说话人提取技术。该方法将波束形成(BF)应用于每个麦克风阵列,并将BF输出之间的公共分量估计为目标源。提出了一种基于非负张量因子分解(NTF)的公共分量提取方法,以提高模型的可解释性和对超参数的鲁棒性。此外,基于吸引子的正则化,以促进自动选择的最佳目标基的NTF。实验结果表明,该方法具有比传统方法更好的光斑形成性能,并显示出一些适合于实际应用的特点。

摘要:Spotforming is a target-speaker extraction technique that uses multiple microphone arrays. This method applies beamforming (BF) to each microphone array, and the common components among the BF outputs are estimated as the target source. This study proposes a new common component extraction method based on nonnegative tensor factorization (NTF) for higher model interpretability and more robust spotforming against hyperparameters. Moreover, attractor-based regularization was introduced to facilitate the automatic selection of optimal target bases in the NTF. Experimental results show that the proposed method performs better than conventional methods in spotforming performance and also shows some characteristics suitable for practical use.

![[本地]GPT-SoVITS模型推理使用教学-AI星球|配音工坊](https://aiaf.cc/wp-content/uploads/2024/08/420164872620240823093402.png)

![[ 双模型]Bert-VITS2|GPT-SOVITS 舌尖上的中国配音 声音配音模型-AI星球|配音工坊](https://aiaf.cc/wp-content/uploads/2024/07/sjszg.jpg)

![[云端]GPT-SoVITS模型推理使用教学-AI星球|配音工坊](https://vip.123pan.cn/1816369016/ymjew503t0n000d7w32y5ezzxloqmsxbDIYPAqDzBIaPApxvAIU=.webp)

请登录后查看评论内容